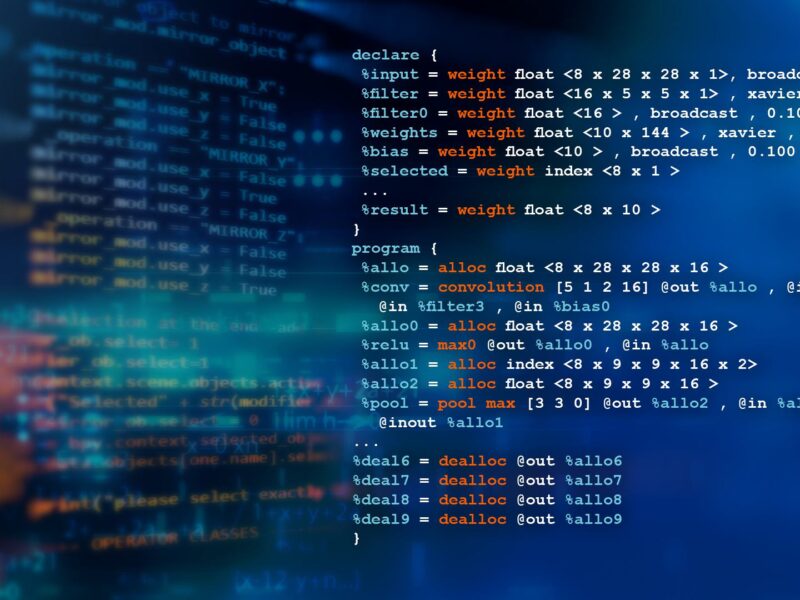

MCU-based implementation of Glow neural network compiler

NXP claims that this support provides the industry’s first NN compiler implementation to deliver higher performance with a low memory footprint on NXP’s i.MX RT crossover MCUs.

Originally developed by Facebook, Glow can integrate target-specific optimizations. NXP took advantage of this ability by using NN operator libraries for Arm Cortex-M cores and the Cadence Tensilica HiFi 4 DSP. This combination of hardware and software maximizes the inferencing performance of the i.MX RT685, i.MX RT1050 and i.MX RT1060. The capability is also integrated into NXP’s eIQ Machine Learning Software Development Environment, which is freely available within NXP’s MCUXpresso SDK.

As an NN compiler, Glow takes in an unoptimized neural network and generates highly optimized code. Typically, neural network model processing uses just-in-time compilation, which demands more processing performance and adds memory overhead. Directly running optimized code, as Glow does, reduces both processing and memory requirements. NXP has been active in the Glow open source community to assist in the acceptance of new Glow features.

“The standard, out-of-the-box version of Glow from GitHub is device agnostic to give users the flexibility to compile neural network models for basic architectures of interest, including the Arm Cortex-A and Cortex-M cores, as well as RISC-V architectures,” said Dwarak Rajagopal, Software Engineering Manager at Facebook. “By using purpose-built software libraries that exploit the compute elements of their MCUs and delivering a 2-3x performance increase, NXP has demonstrated the wide-ranging benefits of using the Glow NN compiler for machine learning applications, from high-end cloud-based machines to low-cost embedded platforms.”

NXP’s edge intelligence environment solution for ML provides building blocks for efficient implementation of ML in edge devices. By merging Glow into eIQ software, ML developers can now use a high-performance framework that is scalable across NXP’s edge processing solutions, including the i.MX RT crossover MCUs and i.MX 8 application processors.

eIQ now includes inferencing support for both Glow and TensorFlow Lite, for which NXP routinely performs benchmarking activities to measure performance. MCU benchmarks include standard NN models, such as CIFAR-10. Using a CIFAR-10 model as an example, the benchmark data acquired by NXP shows how to leverage the performance advantage of the i.MX RT1060 device (with 600 MHz Arm Cortex-M7), i.MX RT1170 device (with 1 GHz Arm Cortex-M7), and i.MX RT685 device (with 600 MHz Cadence Tensilica HiFi 4 DSP).

NXP’s enablement for Glow is tightly coupled with the Neural Network Library (NNLib) that Cadence provides for its Tensilica HiFi 4 DSP, which delivers 4.8 GMACs of performance. In the same CIFAR-10 example, NXP’s implementation of Glow achieves a 25x performance advantage by using this DSP to accelerate the NN operations.

NXP’s eIQ for Glow NN compiler is available now, delivered via MCUXpresso SDK for i.MX RT600 crossover MCUs, as well as i.MX RT1050 and i.MX RT1060 crossover MCUs. eIQ for Glow NN compiler will be available for other NXP MCUs in the future.
