Imagination launches flexible neural network IP

The 2NX is intended for deployment in “leaf node” applications such as mobile, surveillance, automotive and consumer systems, and is designed to complement GPU cores directly. In a 16nm process technology the 2NX will occupy from less than 1 square millimeter up to a few square millimeters.

Both the training of convolutional neural networks, which is a lengthy process, and inferencing on specific examples have tended to be done in the cloud. For reasons of latency and power efficiency, however, many applications need at least inferencing to be done on the leaf nodes; collision avoidance for drones and automobiles is one example.

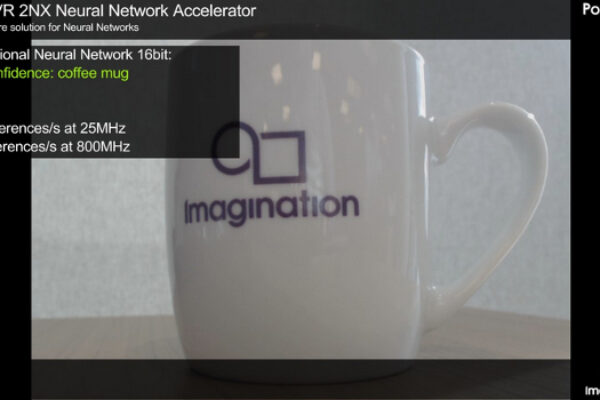

Block diagram of the PowerVR 2NX neural network accelerator IP core. Source: Imagination.

“Dedicated hardware for neural network acceleration will become a standard IP block on future SoCs just as CPUs and GPUs have done,” said Chris Longstaff, senior director of product and technology marketing, PowerVR, at Imagination, in a statement. “We are excited to bring to market the first full hardware accelerator to completely support a flexible approach to precision, enabling neural networks to be executed in the lowest power and bandwidth, whilst offering absolute performance and performance per square millimeter that outstrips competing solutions. The tools we provide will enable developers to get their networks up and running very quickly for a fast path to revenue.”


The PowerVR 2NX has been designed from the ground up as a core for inferencing on a mobile SoC. It is a scalable architecture with up to eight neural network compute engines. Each engine can perform up to 128 16-bit multiply-accumulate operations per clock (MACs/clk) or 256 8-bit MACs/clk, giving at the high end 1024 16-bit or 2048 8-bit MACs/clk.
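The quoted throughput figures follow from simple arithmetic, sketched below in Python: the per-engine rates are multiplied by the engine count. Only the 16-bit and 8-bit rates are given in the article, so the sketch covers just those. The 800 MHz clock in the TOPS helper is a hypothetical value chosen for illustration (no clock speed is stated here), and counting one MAC as two operations is a common convention, not a figure from Imagination.

```python
# Peak multiply-accumulate (MAC) throughput of a PowerVR 2NX configuration,
# using the per-engine rates quoted in the article (16-bit and 8-bit only).
MACS_PER_ENGINE_PER_CLK = {16: 128, 8: 256}  # data width (bits) -> MACs/clk
MAX_ENGINES = 8


def peak_macs_per_clock(engines: int, bits: int) -> int:
    """Peak MACs per clock for a given engine count and data width."""
    if not 1 <= engines <= MAX_ENGINES:
        raise ValueError(f"engines must be between 1 and {MAX_ENGINES}")
    if bits not in MACS_PER_ENGINE_PER_CLK:
        raise ValueError("only 16-bit and 8-bit rates are quoted")
    return engines * MACS_PER_ENGINE_PER_CLK[bits]


def peak_tops(engines: int, bits: int, clock_hz: float) -> float:
    """Peak tera-operations per second, counting one MAC as two operations
    (multiply plus add). The clock frequency is an assumed input."""
    return 2 * peak_macs_per_clock(engines, bits) * clock_hz / 1e12


if __name__ == "__main__":
    for bits in (16, 8):
        print(f"{bits}-bit, 8 engines: {peak_macs_per_clock(8, bits)} MACs/clk")
    # Hypothetical 800 MHz clock, for illustration only.
    print(f"8-bit peak at 800 MHz: {peak_tops(8, 8, 800e6):.2f} TOPS")
```

Real sustained throughput will be lower than these peaks, since it depends on how well a given network keeps the engines fed.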

Variable data precision can be used to reduce memory bandwidth and power consumption. Source: Imagination.

It has also been designed to be flexible in data type support. Some markets, such as automotive, mandate 16-bit support, whilst others take advantage of the benefits of lower precision. The 2NX supports 16-, 12-, 10-, 8-, 7-, 6-, 5- and 4-bit data, with per-layer adjustment for both weights and activations, and variable-precision internal data formats. Where required, 32-bit floating-point precision can be used inside the accumulator, with variable output precision. Imagination claims that a dedicated NN accelerator such as the 2NX provides an 8x improvement in performance and density compared with DSP-only solutions.
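Per-layer precision can be illustrated with simple symmetric linear quantization, in which each layer's tensor gets one scale factor and is rounded to a signed integer of the chosen width. This is a minimal sketch of a generic technique, not Imagination's internal scheme; the layer names, bit-width plan and example weights are all hypothetical.

```python
# Symmetric, per-layer linear quantization at a configurable bit width.
# Generic illustration only; not Imagination's actual quantization scheme.


def quantize_symmetric(values, bits):
    """Map floats to signed integers in [-qmax, qmax] using a single scale
    factor for the whole tensor (one scale per layer). Returns (ints, scale)."""
    if not 2 <= bits <= 16:
        raise ValueError("bits must be between 2 and 16")
    qmax = 2 ** (bits - 1) - 1  # e.g. 127 for 8-bit, 7 for 4-bit
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    ints = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return ints, scale


def dequantize(ints, scale):
    """Recover approximate float values from quantized integers."""
    return [q * scale for q in ints]


# Hypothetical per-layer plan: (weight bits, activation bits). Weights and
# activations can use different widths, and each layer can differ.
LAYER_PRECISION = {"conv1": (8, 8), "conv2": (6, 8), "fc": (4, 6)}

if __name__ == "__main__":
    weights = [0.31, -0.82, 0.05, 0.64]  # made-up example weights
    for layer, (w_bits, _a_bits) in LAYER_PRECISION.items():
        ints, scale = quantize_symmetric(weights, w_bits)
        error = max(abs(a - b) for a, b in zip(weights, dequantize(ints, scale)))
        print(f"{layer}: {w_bits}-bit weights, max abs error {error:.4f}")
```

Fewer bits mean a coarser step size and therefore larger rounding error, which is the accuracy cost traded against the bandwidth and power savings.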

In addition, neural networks are traditionally bandwidth-hungry, and their memory bandwidth requirements grow as network models get larger. The 2NX's ability to tailor data precision helps it minimize bandwidth requirements, which in turn reduces power consumption.
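A back-of-the-envelope estimate shows why precision matters for bandwidth. The sketch below assumes, purely for illustration, that every weight is fetched from off-chip memory once per inference, and it ignores activations, caching and compression; the model size, layer split and 30 inferences per second are hypothetical numbers.

```python
# Rough off-chip weight traffic for a network at different weight precisions.
# Assumptions (illustrative only): every weight is read from DRAM once per
# inference; activations, caching and compression are ignored.


def traffic_per_inference(layers):
    """layers: iterable of (parameter_count, weight_bits). Returns bytes."""
    return sum(params * bits for params, bits in layers) / 8


def bandwidth_mb_per_s(layers, inferences_per_s):
    """Average weight bandwidth in megabytes (10**6 bytes) per second."""
    return traffic_per_inference(layers) * inferences_per_s / 1e6


if __name__ == "__main__":
    params = 5_000_000  # hypothetical model size
    for bits in (16, 8, 4):
        mb_s = bandwidth_mb_per_s([(params, bits)], 30)
        print(f"{bits:>2}-bit weights at 30 inferences/s: {mb_s:.0f} MB/s")
    # Mixed precision: per-layer widths (layer sizes made up for illustration).
    mixed = [(1_000_000, 16), (3_000_000, 8), (1_000_000, 4)]
    print(f"mixed precision: {bandwidth_mb_per_s(mixed, 30):.0f} MB/s")
```

Under these assumptions, weight traffic scales linearly with bit width: moving from 16-bit to 8-bit weights halves it, and 4-bit weights cut it to a quarter.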

The core contains kilobyte-sized SRAM accumulator and shared buffers, with the option to increase on-chip local memory to minimize off-chip data fetches, plus an optional memory management unit (MMU). The MMU helps the 2NX work with complex operating systems such as Android and Linux, which makes it suitable for applications including Android smartphones.

How PowerVR 2NX sits within mobile SoC. Source: Imagination.

Imagination has made the read and write formats compatible with established ISPs, GPUs and CPUs, which provides interoperability with other system components with minimal external processing and bandwidth. There is also support for interchange formats such as the Neural Network Exchange Format (NNEF) developed by the Khronos Group. The NNEF standard encapsulates neural network structure, data formats, commonly used operations (such as convolution, pooling and normalization) and formal network semantics.

To ease development, Imagination has created an application programming interface for the neural network accelerator (NNA) called DNN API. It is also compatible with CPUs and GPUs, allowing developers to prototype on existing platforms and then move to the NNA for higher speed and lower power consumption.
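The prototype-then-port workflow follows a familiar backend-abstraction pattern: the network is written once against an interface, and the execution target is swapped underneath it. The Python sketch below is hypothetical and does not show the real DNN API; the class and method names are invented, and the "NNA" backend merely emulates 8-bit weights on the CPU to mimic reduced precision.

```python
# Backend abstraction: write the network once, then swap the execution target.
# All names here are hypothetical; this is not Imagination's DNN API.
from abc import ABC, abstractmethod


class Backend(ABC):
    """Interface every execution target implements."""

    @abstractmethod
    def dense(self, x, weights):
        """Fully connected layer: weights is a list of rows, one per output."""


class CpuBackend(Backend):
    """Reference float implementation, as used for prototyping."""

    def dense(self, x, weights):
        return [sum(w * v for w, v in zip(row, x)) for row in weights]


class NnaEmulatorBackend(Backend):
    """Stand-in for an accelerator: emulates reduced-precision weights on the
    CPU by quantizing them to `bits` with one scale per layer."""

    def __init__(self, bits=8):
        self.bits = bits

    def dense(self, x, weights):
        qmax = 2 ** (self.bits - 1) - 1
        max_abs = max(abs(w) for row in weights for w in row) or 1.0
        scale = max_abs / qmax
        return [scale * sum(round(w / scale) * v for w, v in zip(row, x))
                for row in weights]


def relu(xs):
    return [max(0.0, v) for v in xs]


def run_network(backend, x, layers):
    """Model code is backend-agnostic: it only calls the Backend interface."""
    for weights in layers:
        x = relu(backend.dense(x, weights))
    return x


if __name__ == "__main__":
    layers = [[[0.5, -0.2], [0.1, 0.9]], [[1.0, 1.0]]]  # toy two-layer network
    x = [1.0, 2.0]
    print("cpu:", run_network(CpuBackend(), x, layers))
    print("nna (emulated 8-bit):", run_network(NnaEmulatorBackend(8), x, layers))
```

Because `run_network` depends only on the interface, comparing the two backends' outputs gives a quick check of how much accuracy the lower-precision target gives up before any hardware is available.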

Imagination has been working on the IP for about two years. “We are at the beta stage with a number of partners evaluating the core. We expect integration of the core within SoC designs in 1H18 and it to appear in silicon towards the end of 2018, maybe…depends on the speed of licensees,” said Longstaff.

Related links and articles:

https://caffe.berkeleyvision.org

News articles:

BrainChip launches neuromorphic hardware accelerator

Cambricon licenses Moortec’s 16nm in-chip monitor

Movidius upgrades VPU with on-chip neural compute

Huawei integrates AI in its latest mobile processor

iPhone X chip shows problems of Imagination

If you enjoyed this article, you will like the following ones: don't miss them by subscribing to :

eeNews on Google News